👋 Hi, I’m Ramin.

There’s a good chance you’ve never heard of me, but I’ve been around, quietly building things for a long time.

Obligatory bio: Graduated high school at 15 with O-levels. Studied CompSci, English Lit, and Philosophy at university. Decades in Silicon Valley. A dozen technology patents awarded and pending. Here's my CV: https://ramin.work.

Not that any of it matters. Opinions are all my own and only mine. Fair warning: likely off on many of them.

This series came out of several decades of working, watching, and thinking about what one of my heroes, John McCarthy, named Artificial Intelligence.

You should know: I am not a computer researcher. I’m a professional developer and software architect, a learner, teacher, and builder.

Technology is a magnificent tool for expanding and helping humanity. Unfortunately, it can also cause grievous harm, especially at scale. We’ll get to all that later.

My work has been focused on pragmatics—the nuts and bolts of application development. But my background and interests have always been in the humanities, especially literature, philosophy, and storytelling, with a focus on understanding where ideas fit. Artificial Intelligence in the mid-80s was a combination of all these, synthesized together. It was the original STEAM before anyone called it that.

By the late 1980s, things quickly devolved. We hit what came to be known as the Second AI Winter and all funding dried up. I moved on to working on Industrial and Consumer products: the Internet, Man-Machine Interfaces, and application development. I was lucky to be working in the San Francisco Bay Area and Silicon Valley during those exciting times, feeling like Zelig at the periphery of historical events.

Somewhere along the line, I ended up building a couple of browsers, moved into the world of mobile applications, and then connected hardware. Ten years ago, I fell back into AI Assistants and their second cousin, wearables. I bring this up because I believe edge computing and smart hardware are the only ways to solve intractable problems in privacy, cost, and genuinely personal Humanist AI.

We’ll get to all that.

Much of my work has been as a consultant around Silicon Valley, hopping from project to project. This exposed me to a wide range of technologies, everything from the web front-end to mobile, server back-end, serverless cloud, embedded firmware, and robotics, with a dose of soldering and bodge-wiring thrown in.

A few times, I tried my hand at founding companies (with mixed results). I’ve helped build and ship apps that have been featured on every mainstream app store (Mac, Windows, iOS, Android, and Apple Watch).

Along the way, to quote Liam Neeson in Taken:

Feel free to add a "master of none."

I know my lane.

In 2019, I ended up full-time at Amazon’s Lab126, working on Alexa devices and poring through every line of its code. After that, I moved to AWS R&D, working at the intersection of devices, user interfaces, and large-scale architectures. Later, at Panasonic R&D, I did a lot of thinking about where AI Assistants could go next, starting with helping the elderly and people with different abilities, and ended up filing a few related patents.

My conclusion: Think of users first.

This might seem obvious, but if you look at many products, their primary benefits redound to some other entity. It’s easy to lose sight of benefiting users and end up in a state of what Cory Doctorow calls enshittification.

I’ve been through multiple Silicon Valley crashes and at least one AI Winter. I hope this current bubble doesn’t end up in a bad place. At some point, expectations and reality will hopefully sync up, and we will end up with something useful that makes life easier for everyone. It’s happened before, and it will happen again. Like Fichte’s Dialectic: Thesis, Antithesis, and finally, Synthesis. Until then…

I started writing this series because a loud nagging voice inside me kept yelling that I should, until I could no longer ignore it.

In any other knowledge domain, we would be learning from the hard-earned lessons of those who came before us, rather than hitting reset and starting all over every 5-10 years, or hype cycle to hype cycle.

In bridge construction, there’s an accumulated knowledge of how to build a decent, functional bridge. More importantly, humanity has learned what NOT to do so these structures don’t come tumbling down. That is how we truly advance, by remaining curious and showing humility.

Same with medicine, automotive, farming, or psychology. People learn and build on the mistakes and lessons of previous generations. We would do well in the software world to do the same.

The world of AI didn’t materialize because GenAI showed up. ELIZA was carrying on text conversations with people back in the 1960s. It just didn’t do it as well and used different plumbing, appropriate to the period. The impact ELIZA had on the direction of research was significant (despite the creator’s misgivings; we’ll cover all that in the next section). The enthusiasm led to promises of General Intelligence ‘just a few years from now.’ That legacy continues nearly 60 years later, in the guise of AGI or Personal SuperIntelligence.

Sound familiar? None of this is new.

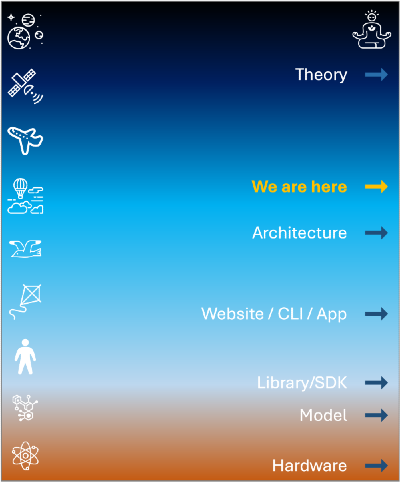

My goal for this series is to pitch the technical content at an abstraction level between pure theory and low-level hardware, hopefully keeping it accessible to a broader audience. Think of it like this:

Occasionally, we’ll dip into the lower layers, but I will do my best to provide context on where they fit.

When it comes to AI, I am neither a fist-shaking doomer, nor a starry-eyed Pangloss. I am what you might call a Pragmatic Optimist, and very much aligned with E.M. Forster’s notion of Humanism.

I believe it is more useful to create long-lasting technical architectures with purpose. These should remain stable, regardless of the latest fads, but more importantly, build toward an agreed, common goal.

Think of me as a nobody with a lot of ideas. By the end of this series, my goal is to show how the next version of AI can truly help humanity, instead of plunging headfirst into a future not unlike The Machine.

This is my manifesto for a Humanist AI and, eventually, an alternative to the Turing Test.

I thank you for your attention and invite you to subscribe and stick around for the ride.

It should be fun.